Last modified: Jan 31 2026 at 10:09 PM • 9 mins read

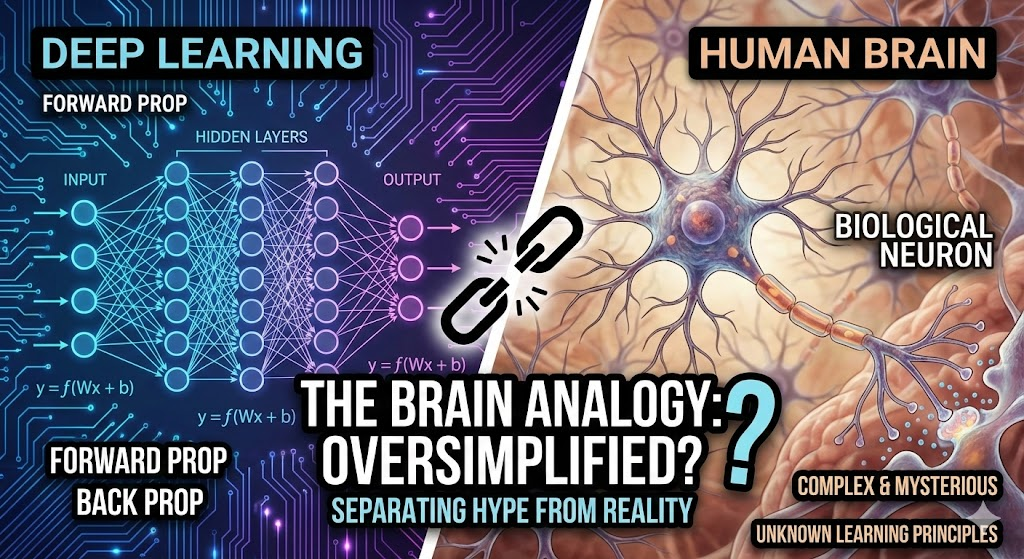

What does this have to do with the brain?

Table of contents

- Introduction

- The Honest Answer

- Why the Brain Analogy Persists

- The Biological Neuron vs Artificial Neuron

- What We Don’t Know About the Brain

- The Limited Analogy

- How to Think About Deep Learning Today

- Domain-Specific Inspiration

- The Evolution of the Analogy

- Practical Implications

- The Future

- Key Takeaways

Introduction

You’ve learned how to implement forward and backward propagation for deep neural networks. Now let’s address a common question: What does deep learning have to do with the human brain?

Short answer: Not as much as the name “neural network” suggests!

This lesson clarifies:

- Why the brain analogy exists (and why it’s appealing)

- What artificial neurons actually do vs biological neurons

- Why the analogy is oversimplified

- When the analogy is (and isn’t) useful

- How to think about deep learning today

The Honest Answer

The Punchline First

Deep learning has very little to do with how the human brain actually works.

Despite the name “neural networks” and the terminology we use:

- Neurons

- Layers

- Activation

- Connections

The actual algorithms we implement (forward prop, backprop, gradient descent) are mathematical optimization techniques, not biological processes.

Why the Brain Analogy Persists

Reason 1: Simplicity and Seductiveness

When you implement a neural network, you write:

\[Z^{[l]} = W^{[l]} A^{[l-1]} + b^{[l]}\] \[A^{[l]} = g^{[l]}(Z^{[l]})\]The challenge: Explaining what these equations really do—learning complex functions through optimization—is difficult.

The temptation: Saying “it works like the brain” is:

- Simple to understand

- Intuitive for non-experts

- Exciting for popular imagination

- Media-friendly for news articles

Problem: This oversimplification obscures what’s actually happening and creates misconceptions.

Reason 2: Historical Origins

The original perceptron (1950s-1960s) was explicitly inspired by biological neurons:

- Takes multiple inputs

- Weights them

- Produces an output if threshold exceeded

This initial inspiration gave us the terminology, but the field has evolved far beyond this simple model.

Reason 3: Public Appeal

The brain analogy captures public imagination:

Headlines that work:

- “AI that thinks like a human brain!”

- “Machines learning the way you do!”

- “Computer with artificial neurons!”

Reality: Mathematical function approximators optimized with calculus

The gap between these is significant!

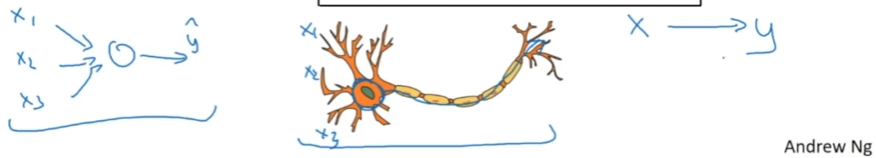

The Biological Neuron vs Artificial Neuron

Biological Neuron (Actual Brain Cell)

How it works (simplified):

- Input: Receives electrical/chemical signals from other neurons

- Integration: Combines signals (not just linear sum!)

- Threshold: If combined signal exceeds threshold, neuron “fires”

- Output: Sends electrical pulse down axon to other neurons

Key characteristics:

- Chemical and electrical signaling

- Complex temporal dynamics (timing matters!)

- Non-linear integration mechanisms

- Adaptive thresholds

- Multiple neurotransmitters

- Synaptic plasticity (connections change)

Artificial Neuron (In Neural Network)

Inputs: x₁, x₂, x₃

↓ ↓ ↓

Weights: w₁, w₂, w₃

↓ ↓ ↓

Linear: z = Σ(wᵢxᵢ) + b

↓

Activation: a = g(z)

↓

Output: a

How it works:

\[z = w_1 x_1 + w_2 x_2 + w_3 x_3 + b\] \[a = g(z)\]Where $g$ might be sigmoid, ReLU, or tanh.

Key characteristics:

- Simple weighted sum

- Deterministic activation function

- No temporal dynamics (timing ignored)

- No chemical processes

- Fixed computational model

- Parameters updated via gradient descent

The Comparison

| Aspect | Biological Neuron | Artificial Neuron |

|---|---|---|

| Inputs | 1,000-10,000 synapses | Typically 10-1,000 |

| Processing | Complex, poorly understood | Simple: $z = Wx + b$, $a = g(z)$ |

| Timing | Critical (millisecond precision) | Ignored (static computation) |

| Plasticity | Continuous adaptation | Discrete updates (gradient steps) |

| Energy | Extremely efficient (~20W for brain) | Power-hungry (GPUs use 100s of watts) |

| Speed | Slow (~1-100 Hz firing rate) | Fast (billions of ops/sec) |

| Understood? | Very little | Completely |

Key insight: Even neuroscientists don’t fully understand what a single biological neuron does!

What We Don’t Know About the Brain

Mystery 1: Single Neuron Complexity

Current knowledge: A biological neuron is far more complex than our models suggest.

Recent neuroscience discoveries:

- Neurons have internal compartments with different functions

- Dendrites (input branches) perform computations themselves

- Non-linear signal integration in dendrites

- Hundreds of ion channels with complex dynamics

- Multiple types of neurotransmitters with different effects

Reality check: A single biological neuron may be as complex as an entire small neural network!

Mystery 2: Learning Mechanisms

Question: How does the brain learn?

Honest answer: We don’t really know.

What we know it’s NOT:

- Almost certainly not backpropagation (no mechanism for it)

- Almost certainly not gradient descent (no global cost function)

Possibilities being researched:

- Local learning rules (Hebbian: “neurons that fire together wire together”)

- Spike-timing-dependent plasticity (STDP)

- Reward-based learning (dopamine signals)

- Predictive coding

- Some completely unknown mechanism

Key point: Whether the brain uses anything resembling backpropagation remains an open question in neuroscience.

Mystery 3: Network Organization

The brain’s architecture is nothing like our feed-forward networks:

Artificial networks:

Input → Layer 1 → Layer 2 → ... → Layer L → Output

(feed-forward, clean hierarchy)

Brain:

Massive recurrent connections

Feedback loops everywhere

Hierarchical AND lateral connections

Dynamic routing of information

Attention mechanisms

Working memory

The brain is vastly more complex than our simplified architectures.

The Limited Analogy

Where the Analogy Holds (Weakly)

Similarity 1: Computation from inputs

Both take inputs and produce outputs:

| Artificial | Biological |

|---|---|

| $a = \sigma(w^T x + b)$ | Neuron fires based on inputs |

Similarity 2: Thresholding

Both have activation thresholds:

| Artificial | Biological |

|---|---|

| ReLU: $\max(0, z)$ | Fire only if threshold exceeded |

Similarity 3: Distributed representation

Both use multiple units working together:

| Artificial | Biological |

|---|---|

| Many neurons per layer | Many neurons per brain region |

Where the Analogy Breaks Down

Difference 1: Learning mechanism

| Artificial | Biological |

|---|---|

| Backpropagation + gradient descent | Unknown (likely very different) |

Difference 2: Computation style

| Artificial | Biological |

|---|---|

| Synchronous, layer-by-layer | Asynchronous, massively parallel |

Difference 3: Temporal dynamics

| Artificial | Biological |

|---|---|

| Static (timing irrelevant) | Dynamic (timing is everything) |

Difference 4: Energy efficiency

| Artificial | Biological |

|---|---|

| Requires huge power (GPUs) | Human brain: ~20 watts total |

Difference 5: Generalization

| Artificial | Biological |

|---|---|

| Needs millions of examples | Humans learn from few examples |

How to Think About Deep Learning Today

The Modern Perspective

Deep learning: A mathematical framework for learning complex functions from data using gradient-based optimization.

What deep learning is GOOD for:

- Learning flexible functions: $f: X \rightarrow Y$

- Input-output mappings: Supervised learning tasks

- Pattern recognition: Images, speech, text

- Function approximation: Any smooth function

- Feature learning: Automatic representation discovery

What makes it work:

- Universal approximation: Neural networks can approximate any function

- Gradient descent: Efficient optimization algorithm

- Backpropagation: Efficient way to compute gradients

- Big data: Lots of examples to learn from

- Computational power: GPUs make training feasible

Not because it mimics the brain!

A Better Mental Model

Instead of thinking:

“Neural networks work like the brain”

Think:

“Neural networks are function approximators optimized with calculus and linear algebra”

The process:

- Data: $(x^{(1)}, y^{(1)}), (x^{(2)}, y^{(2)}), \ldots, (x^{(m)}, y^{(m)})$

- Model: $\hat{y} = f(x; W, b)$ (parameterized function)

- Loss: $\mathcal{L}(\hat{y}, y)$ (how wrong are we?)

- Optimization: Adjust $W, b$ to minimize $\mathcal{L}$ using gradients

- Result: Function that maps $x \rightarrow y$ accurately

This is mathematical optimization, not biological simulation.

Domain-Specific Inspiration

Computer Vision: More Brain-Inspired

Observation: Computer vision has taken slightly more inspiration from neuroscience than other fields.

Examples:

- Hierarchical processing

- Brain: V1 → V2 → V4 → IT cortex

- CNNs: Conv1 → Conv2 → Conv3 → FC layers

- Local receptive fields

- Brain: Neurons respond to local image regions

- CNNs: Convolutional filters operate locally

- Feature hierarchy

- Brain: Edges → shapes → objects → concepts

- CNNs: Low-level → mid-level → high-level features

But even here: The implementation details are completely different!

Other Domains: Less Brain Connection

| Domain | Brain Inspiration | Actual Implementation |

|---|---|---|

| NLP | Minimal | Transformers, attention (not brain-like) |

| Speech | Some (auditory cortex) | Signal processing + deep learning |

| Reinforcement Learning | Some (dopamine, rewards) | Bellman equations, Q-learning |

| Time Series | Minimal | RNNs, LSTMs (mathematical constructs) |

The Evolution of the Analogy

Historical Perspective

1950s-1960s: Strong brain inspiration

- Perceptrons explicitly modeled after neurons

- Enthusiasm about “thinking machines”

1980s-1990s: Mathematical focus

- Backpropagation derived from calculus

- Universal approximation theorems

- Focus on optimization

2000s-2010s: Engineering success

- Deep learning works on practical problems

- Brain analogy revived for marketing

- But practitioners know it’s optimization

2020s (Today): Pragmatic view

- Brain analogy used for intuition only

- Research focuses on what works, not biological plausibility

- Neuroscience and AI are separate (though interacting) fields

Personal Perspective (from Andrew Ng)

“When I think of deep learning, I think of it as being very good at learning flexible, complex functions—learning $X$ to $Y$ mappings in supervised learning.”

“The brain analogy maybe was useful once, but I think the field has moved to the point where that analogy is breaking down.”

“I tend not to use that analogy much anymore.”

This is the expert view: The brain analogy is historical, not technical.

Practical Implications

What This Means for You

When learning deep learning:

- ✅ Do: Focus on the mathematics

- Understand forward propagation (function composition)

- Understand backpropagation (chain rule)

- Understand gradient descent (optimization)

- ✅ Do: Think in terms of functions

- Neural networks learn $f: X \rightarrow Y$

- Layers compose functions

- Training finds good parameters

- ❌ Don’t: Get distracted by brain analogies

- They don’t help you implement better models

- They don’t explain why things work

- They can be misleading

- ❌ Don’t: Expect biological plausibility

- We’re not simulating brains

- We’re solving engineering problems

- Different goals entirely

When the Analogy Is Useful

The brain analogy can help with:

High-level intuition:

- “Multiple processing units working together”

- “Hierarchical feature learning”

- “Distributed representations”

Communication with non-experts:

- Explaining what neural networks are

- Generating interest and excitement

- Avoiding heavy mathematics

But always clarify: It’s a loose metaphor, not a technical description!

The Future

Diverging Paths

Neuroscience and AI are increasingly separate fields with different goals:

Neuroscience goals:

- Understand how biological brains work

- Explain consciousness, memory, cognition

- Medical applications (treating brain disorders)

Deep learning goals:

- Build practical systems that work

- Maximize performance on specific tasks

- Scale to large datasets efficiently

Overlap: Minimal and shrinking.

Potential Reconnection

Possible future:

- If we really understand how the brain learns, we might borrow those principles

- Brain-inspired algorithms might be more efficient

- But we’re nowhere near that understanding yet

Current reality: Deep learning advances through:

- Engineering innovations

- Mathematical insights

- Empirical experimentation

Not through neuroscience discoveries.

Key Takeaways

- Deep learning ≠ brain: Despite the name, neural networks are mathematical optimization tools, not brain simulations

- Oversimplified analogy: The brain comparison is seductive but misleading

- Biological neurons are complex: Far more sophisticated than our models

- Learning is mysterious: We don’t know how the brain learns (probably not backprop!)

- Artificial neurons are simple: Just $z = Wx + b$ and $a = g(z)$

- No temporal dynamics: Our networks ignore timing (crucial in brains)

- Unknown learning mechanism: Brain likely doesn’t use gradient descent

- Energy efficiency: Brain uses ~20W, GPUs use 100s of watts

- Different organization: Brain has massive recurrence, feedback, and dynamics

- Think mathematically: Focus on function approximation and optimization

- Computer vision exception: Slightly more brain-inspired than other domains

- Historical artifact: Brain analogy comes from 1950s-1960s origins

- Modern perspective: Practitioners don’t rely on brain analogy

- Separate fields: Neuroscience and AI have different goals

- What matters: Deep learning works because of math, data, and compute—not biological plausibility!